written by Lant Pritchett

At a recent holiday party I was discussing organizations and innovations with a friend of mine who teaches about organizations at the Harvard Business School and is a professor and student of technology and history. I told him I was thinking about the lessons that the AK47 versus M16 debate holds for the development “best practice” mantra. Naturally, he brought out his own versions of both for comparison: the early Colt AR-15 developed for the US Air Force (which became the M16) and an East German-produced AK-47.

Development practice can learn from the AK47. It is far and away the most widely available and used assault rifle in the world, even though it is easy to argue that the M16 is the “best practice” assault rifle. A key question for armies is whether in practice it is better to adapt the weapon to the soldiers you have or to train the soldiers you have to the weapon you want. The fundamental AK47 design principle is simplicity, which leads to robustness in operation and effective use even by poorly trained combatants in actual combat conditions. In contrast, the M16 is a better weapon on many dimensions, including accuracy, but only works well when used and cared for by highly trained and capable soldiers.

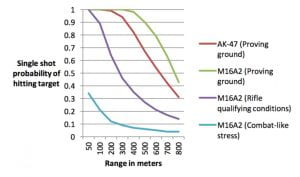

One important criterion for any weapon is accuracy. In the 1980s the US military compared the accuracy of the AK47 and the M16 at various distances in proving ground conditions that isolated pure weapon accuracy. The following chart shows the single shot probabilities of hitting a standard silhouette target at those distances. It would be easy to use this chart to argue that the M16 is a “best practice” weapon, as at middle to long distances its single shot hit probability is 20 percent higher.

Figure 1: At proving ground conditions the AK47 is a less accurate weapon than the M16A1 at distances above 200 yards

Source: Table 4.3, Weaver 1990.

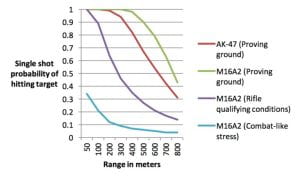

The study also estimates, though, the probability of hitting a target when the aiming errors of an actual user of the weapon are included. In “rifle qualifying” conditions the shooter is under no time pressure or other stress and knows the distance to the target, so these are ideal conditions for the shooter to demonstrate high capacity. In “worst field experience” conditions the shooter is under high or combat-like stress, although obviously these data are from simulations of stress, as it is impossible to collect reliable data from actual combat.

It is obvious in Figure 2 that over most of the range at which assault rifles are used in combat, essentially all of the likelihood of missing the target comes from shooter performance and almost none from the intrinsic accuracy of the weapon. The M16 maintains a proving ground hit probability of 98 percent out to 400 yards, but at 400 yards even a trained marksman in zero stress conditions has only a 35 percent chance of a hit, and under stress this falls to only 7 percent.

Figure 2: The intrinsic accuracy of the weapon as assessed on the proving ground is not a significant constraint on shooter accuracy under high stress shooting conditions

Source: Table 4.2, Weaver 1990.

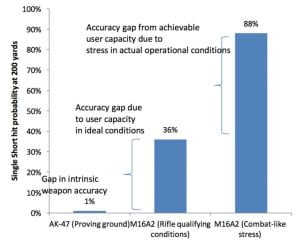

At 200 yards we can decompose the shortfall from the ideal conditions of “best practice” (the M16 on the proving ground has a 100 percent hit probability) into the contributions of a less accurate weapon, user capacity even in ideal conditions, and user performance under stress. The AK47 is 99 percent accurate, but in rifle qualifying conditions the hit probability is only 64 percent with the M16, and in stressed situations only 12 percent with the M16. So if a shooter misses with an AK47 at 200 yards in combat conditions it is almost certainly due to the user and not the weapon. As the author puts it (in what appears to be military use of irony), while there are demonstrable differences in weapon accuracy, they are not really an issue in actual use conditions by actual soldiers:

It is not unusual for differences to be found in the intrinsic, technical performance of different weapons measured at a proving ground. It is almost certain that these differences will not have any operational significance. It is true, however that the differences in the…rifles shown…are large enough to be a concern to a championship caliber, competition shooter.

Figure 3: Decomposing the probability of a miss into that due to weapon accuracy (M16 vs AK47), user capacity in ideal conditions, and operational stress

Source: Figure 2 above, Weaver 1990.
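The arithmetic behind this decomposition can be sketched in a few lines of Python. This is only an illustration: the four hit probabilities are the 200-yard figures quoted in the text, while the function name `gaps_in_points` and the simple differencing scheme are assumptions made here, not necessarily the method used to draw Figure 3. Note also that the weapon gap compares the AK47 with the M16 on the proving ground, while the other two gaps use the M16 figures.

```python
# Hit probabilities at 200 yards, in percent, as quoted in the text.
M16_PROVING_GROUND = 100   # the "best practice" benchmark
AK47_PROVING_GROUND = 99   # intrinsic accuracy of the AK47
M16_QUALIFYING = 64        # trained shooter, no stress
M16_STRESSED = 12          # "worst field experience" conditions


def gaps_in_points(benchmark, ak47_proving, qualifying, stressed):
    """Return percentage-point gaps using simple differences.

    This differencing scheme is an assumption for illustration only.
    """
    return {
        "weapon": benchmark - ak47_proving,         # M16 vs AK47, proving ground
        "user_capacity": benchmark - qualifying,    # proving ground vs qualifying
        "operational_stress": qualifying - stressed,  # qualifying vs stressed
    }


gaps = gaps_in_points(
    M16_PROVING_GROUND, AK47_PROVING_GROUND, M16_QUALIFYING, M16_STRESSED
)
for source, points in gaps.items():
    print(f"{source}: {points} percentage points")
```

On these numbers the weapon accounts for 1 percentage point, user capacity in ideal conditions for 36, and operational stress for 52, which is the sense in which a miss at 200 yards is almost certainly due to the user and not the weapon.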

The AK-47’s limitations in intrinsic accuracy appear to be a technological trade-off and an irremediable consequence of the commitment to design simplicity and operational robustness. The design of the weapon has very loose tolerances, which means that the gun can be abused in a variety of ways and not properly maintained and yet still fire with high reliability, but this does limit accuracy (although the design successor to the AK-47, the currently adopted AK-74, did address accuracy issues). But a weapon that always fires has higher combat effectiveness than a weapon that doesn’t.

While many would argue that the M16 in the hands of a highly trained professional soldier is a superior weapon, this does require training and adapting the soldier and his practices to the weapon. The entire philosophy of the AK-47 is to design and adapt the weapon to soldiers who likely have little or no formal education and who are expected to be conscripted and put into battle with little training. While it is impossible to separate out geopolitics from weapon choice, estimates are that 106 countries’ military or special forces use the AK-47—not to mention its widespread use by informal armed groups—which is a testament to its being adapted to the needs and capabilities of the user.

Application of ideas to basic education in Africa

Now it might seem odd, or even insensitive, to use the analogy of weapon choice to discuss development practice, but the relative importance of (a) latest “best practice” technology or program design or policy versus (b) user capacity versus (c) actual user performance under real world stress as avenues for performance improvement arises again and again in multiple contexts. There is a powerful, often overwhelming, temptation for experts from developed countries to market the latest of what they know and do as “best practice” in their own conditions without adequate consideration of whether this is actually addressing actual performance in context.

The latest Service Delivery Indicators data that the World Bank has created for several countries in Sub-Saharan Africa illustrate these same issues in basic education.

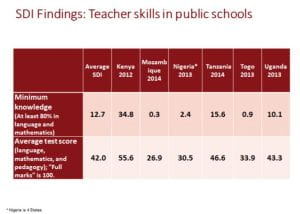

The first issue is “user capacity in ideal conditions”: do teachers actually know the material they are supposed to teach? The grade 4 teachers were administered questions from the grade 4 curriculum. On average only 12.7 percent of teachers scored above 80 percent correct (and this is biased upward by Kenya’s 34 percent, as four of six countries’ teachers were at 10 percent or below). In Mozambique only 65 percent of mathematics teachers could do double digit subtraction with whole numbers (e.g. 86-55), only 39 percent could do subtraction with decimals, and less than 1 percent of teachers scored above 80 percent.

Figure 4: Teachers in many African countries do not master the material they are intended to teach—only 13 percent of grade 4 teachers score above 80 percent on the grade 4 subject matter

Source: Filmer (2015) at RISE conference.

A comparison of the STEP assessment with the PIAAC assessment of literacy in the OECD found that the typical tertiary graduate in Ghana or Kenya has lower literacy proficiency than the typical OECD adult who did not finish high school. A comparison of the performance on TIMSS items finds that teachers in African countries like Malawi and Zambia score about the same as grade 7 and 8 students in typical OECD countries like Belgium.

So, even in ideal conditions in which teachers were present and operating at their maximum capacity, their performance would be limited by the fact that they themselves do not fully master the subject matter at the level at which they are intended to teach it.

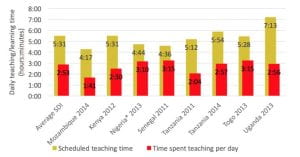

The second issue is performance under “operational stress”, which includes both the stresses of life that might lead teachers to not even reach school on any given day and the administrative and other stresses that might lead teachers to perform below their ideal capacity. The Service Delivery Indicators measure actual time teaching versus the deficits due to absence from the school and lack of presence in the classroom when at the school. The finding is that while the “ideal” teaching/learning time per day is five and a half hours, students are actually only exposed to about 3 hours a day of teaching/learning time on average. In Mozambique the learning time was only an hour and forty minutes a day rather than the official (notional) instructional time of four hours and seventeen minutes.

On top of this pure absence, the question is whether under the actual pressure and stress of classrooms even the teaching/learning time that remains is spent at the maximum of the teacher’s subject matter and pedagogical practice capacity.

Figure 5: Actual teaching/learning time is reduced dramatically by teacher absence from school and classroom

Source: Filmer (2015) at RISE conference
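To put the time figures quoted above on a common scale, the short Python sketch below converts them to the share of scheduled time actually delivered. The durations come from the text (with “about 3 hours” treated as exactly 180 minutes, which is an approximation); the function name `share_delivered` and the constant names are hypothetical, chosen here for illustration.

```python
def share_delivered(actual_minutes, scheduled_minutes):
    """Fraction of scheduled teaching/learning time that students receive."""
    return actual_minutes / scheduled_minutes


# Average across the surveyed countries, as quoted in the text.
AVERAGE_SCHEDULED_MIN = 5 * 60 + 30   # "five and a half hours"
AVERAGE_ACTUAL_MIN = 3 * 60           # "about 3 hours" (approximate)

# Mozambique, as quoted in the text.
MOZAMBIQUE_SCHEDULED_MIN = 4 * 60 + 17   # four hours and seventeen minutes
MOZAMBIQUE_ACTUAL_MIN = 1 * 60 + 40      # an hour and forty minutes

average_share = share_delivered(AVERAGE_ACTUAL_MIN, AVERAGE_SCHEDULED_MIN)
mozambique_share = share_delivered(MOZAMBIQUE_ACTUAL_MIN, MOZAMBIQUE_SCHEDULED_MIN)

print(f"Average: {average_share:.0%} of scheduled time delivered")
print(f"Mozambique: {mozambique_share:.0%} of scheduled time delivered")
```

On these figures students receive roughly 55 percent of the scheduled time on average and roughly 39 percent in Mozambique, before any allowance for how well the remaining time is used.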

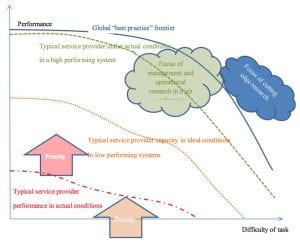

The “global best practice” versus performance priority mismatch

The range in public sector organizational performance and outcomes across the countries of the world is vast in nearly every domain: education, health, policing, tax collection, environmental regulation (and yes, military). In some very simple “logistical” tasks there has been convergence (e.g. vaccination rates and vaccination efficacy are very high even in many very low capability countries) but the gap in more “implementation intensive” tasks is enormous. Measures of early grade mastery of reading range from almost universal to very low: only 1 percent of Philippine 3rd graders cannot read a single word of linked text, whereas 70 percent of Ugandan 3rd graders cannot read at all.

This means that in high performing systems the research questions are pushed to the frontiers of “best practice” both in technology and in the applied research of management and operations. There is no research or application of knowledge aimed at improving performance in tasks that are done well and routinely in actual operational conditions by most or nearly all service providers. That is taken for granted and not a subject of active interest. There is research interest in improving the frontier of possibility, and interest in practical research into how to increase the capacity of the typical service provider and their performance under actual stressed conditions, but in high performing systems these are both aimed at expanding the frontier of actual and achieved practice in the more difficult tasks. This learning may be completely irrelevant to what is the priority in low performing systems. Worse, attempts to transplant “best practice” in technology or organizations or capacity building that are a mismatch for actual capacity, or that cannot be implemented in the current conditions, may distract national elites from their own conditions and priorities.

What are the lessons of the “best practice” successes of the Finnish schooling system for Pakistan or Indonesia or Peru? What are the lessons of Norway’s “best practice” oil revenue stabilization fund for Nigeria or South Sudan? What are the lessons of OECD “best practice” for budget and public financial management for Uganda or Nepal? I am confident there are interesting and relevant lessons to learn, but the experience of the AK-47 should give some pause as to whether a globally relevant “best practice” isn’t a pipe dream.

Figure 6: Potential mismatch of global “best practice” and research performance priorities in low performance systems.